December 17, 2025

PPC & Google Ads Strategies

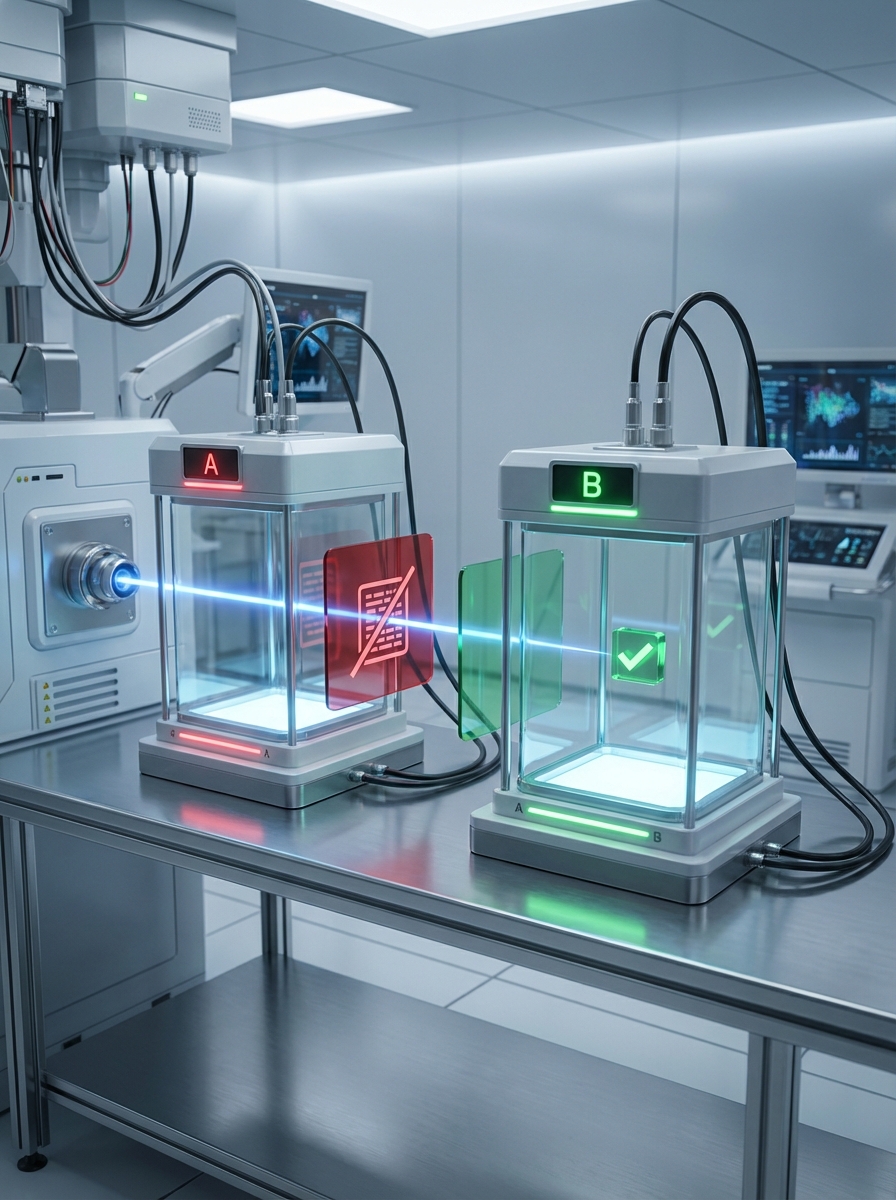

The Negative Keyword Testing Lab: Scientific A/B Testing Methodologies That Prove What's Actually Working

Why Most Negative Keyword Strategies Fail the Scientific Test

You've added hundreds of negative keywords to your Google Ads campaigns. You've reviewed search term reports religiously. You've even automated parts of the process. But here's the critical question most PPC managers never answer: How do you actually know your negative keyword strategy is working?

The uncomfortable truth is that most negative keyword management operates on assumptions rather than evidence. You add a term that looks irrelevant, assume it's saving budget, and move on. But without rigorous testing, you're making decisions in the dark. Some of those "irrelevant" terms might have converted. Some of those "obvious" negatives might have been blocking your best prospects.

This is where scientific A/B testing methodologies transform negative keyword management from guesswork into a data-driven discipline. By treating your negative keyword lists as hypotheses that require validation, you can prove what's actually working and quantify the real impact on your campaigns. This approach doesn't just improve performance—it builds a systematic framework for continuous optimization that gets smarter over time.

Building Your Testing Foundation: The Scientific Method for PPC

Before you can run meaningful tests on your negative keywords, you need to establish a proper experimental framework. The scientific method that drives breakthrough discoveries in laboratories around the world applies equally well to your Google Ads account—you just need to translate the principles into PPC terms.

Step 1: Developing Testable Hypotheses

Every negative keyword test should begin with a clear hypothesis. This isn't just "I think this term is irrelevant." A proper hypothesis for negative keyword testing includes three components: the specific term or category you're testing, the expected impact on performance metrics, and the reasoning behind your expectation.

For example: "Adding 'free' as a broad match negative keyword will reduce wasted spend by 15-20% without decreasing qualified lead volume, because our search term data shows 'free' queries have a 0.3% conversion rate compared to our 4.2% account average, and our service requires paid subscription."

This specificity matters because it gives you clear success criteria. You're not just testing whether to add a negative—you're testing whether it delivers the predicted improvement without unintended consequences. This is the foundation of controlled experiment approaches that protect your budget while validating optimization strategies.

Step 2: Establishing Control and Treatment Groups

The core of any valid A/B test is comparing two identical groups where only one variable changes. For negative keyword testing, this means creating campaign structures that allow for true comparison.

The most rigorous approach uses the Campaign Experiments feature in Google Ads. You create an experiment that applies your negative keyword changes to 50% of your traffic while the control group continues without those negatives. Selecting the cookie-based split option keeps each user in the same arm across searches, which limits crossover contamination.

If Campaign Experiments aren't feasible (perhaps you're testing across multiple accounts or need more granular control), you can use campaign duplication with careful traffic splitting. Create two identical campaigns with identical targeting, budgets, and bids. Apply your negative keywords to Campaign B (treatment) while Campaign A (control) runs unchanged. Use ad scheduling or geographic splits to ensure they're not competing in the same auctions.

The critical rule: Your control and treatment groups must be truly comparable. If you test negatives only on your best-performing campaign while leaving your worst-performing campaign as the control, you're introducing confounding variables that invalidate your results. Match performance levels, seasonality exposure, and audience characteristics as closely as possible.

Step 3: Determining Sample Size and Test Duration

One of the most common mistakes in PPC testing is ending experiments too early. You check results after three days, see a positive trend, and declare victory. But statistical significance requires adequate sample size, and sample size requires time.

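The minimum sample size depends on your baseline conversion rate and the minimum detectable effect you want to identify. As a rough rule of thumb, plan for at least 100 conversions in each group before trying to detect a 20% performance change with 95% confidence; a formal power calculation at low baseline conversion rates often calls for considerably more. If your campaign generates 10 conversions per week and you split traffic 50/50, each group collects about 5 conversions per week, so reaching 100 per group takes roughly 20 weeks. A sequential before-and-after design takes the same 20 weeks in total (10 weeks per period) and adds exposure to seasonal drift between the two periods.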

Beyond raw conversion volume, consider business cycles. If you're testing during a period that includes both high and low demand days, you need to run your test long enough to capture complete cycles. For most businesses, this means minimum test durations of 2-4 weeks, and often 4-8 weeks for campaigns with lower volume or higher variance.

Use statistical significance calculators before launching your test to determine required sample sizes. Input your baseline conversion rate, the minimum improvement you want to detect, and your desired confidence level (typically 95%). This tells you exactly how long you need to run the test based on your traffic volume. Don't check results daily and stop when you see significance—predetermine your test duration and stick to it to avoid p-hacking and false positives.
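If you would rather script the calculation than rely on an online calculator, the standard two-proportion approximation is short enough to write by hand. This is a minimal sketch, not a replacement for a full power analysis: the function name `clicks_per_group` is hypothetical, the 80% power default is an assumption the article does not specify, and the 4% baseline is only an example input.

```python
import math
from statistics import NormalDist


def clicks_per_group(baseline_cvr, relative_lift, alpha=0.05, power=0.80):
    """Approximate clicks needed in EACH group for a two-sided two-proportion test.

    baseline_cvr  : control conversion rate, e.g. 0.04 for 4%
    relative_lift : smallest relative change worth detecting, e.g. 0.20 for 20%
    alpha         : significance level (0.05 corresponds to 95% confidence)
    power         : chance of detecting a true effect of that size (assumed 80%)
    """
    p1 = baseline_cvr
    p2 = baseline_cvr * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)


clicks = clicks_per_group(baseline_cvr=0.04, relative_lift=0.20)
print(f"{clicks} clicks per group (~{round(clicks * 0.04)} control conversions)")
```

Dividing the click requirement by your weekly click volume per group gives the test duration. Results from a formula like this are often larger than simple rules of thumb suggest, so it is worth running the numbers for your own baseline before committing to a timeline.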

Five A/B Testing Methodologies for Negative Keywords

Different testing scenarios require different methodological approaches. Here are five proven frameworks for validating negative keyword strategies, each suited to specific testing objectives and account structures.

Methodology 1: Category-Level Exclusion Testing

This approach tests entire categories of terms rather than individual keywords. It's ideal when you have clear hypotheses about broad term types that might be wasting budget.

Identify a category of search terms that appears consistently in your search term reports but seems low-intent. Common examples include informational modifiers ("what is," "how to," "definition"), comparison terms ("vs," "versus," "compared to"), or price-focused queries ("cheap," "affordable," "discount"). Compile a comprehensive list of terms in this category—for price-focused testing, this might include 20-30 variations of budget-related language.

Create your control and treatment campaigns with identical settings. In the treatment campaign, add your entire category list as negative keywords using appropriate match types (typically phrase or broad match to capture variations). Run the test for your predetermined duration, tracking not just cost and conversion metrics but also impression share and auction competitiveness.

The analysis goes deeper than simple ROAS comparison. Yes, you want to see if excluding these terms improved efficiency. But also examine what happened to overall volume. If you excluded "cheap" terms and saw ROAS improve by 25% but total conversion volume dropped by 40%, you've discovered that price-conscious searchers represent a significant portion of your convertible audience—just at different economics. This insight might lead you to create separate campaigns for budget-focused traffic with adjusted targets rather than excluding it entirely.

Methodology 2: Incremental Negative Addition Testing

Rather than testing categories, this methodology examines the incremental impact of adding specific high-volume negative keywords one at a time or in small groups. It's particularly valuable when you're uncertain about whether a term is truly irrelevant or might have hidden value.

Suppose your search term report shows "free trial" generating significant clicks but low conversions. Your hypothesis is that these users aren't ready to buy, they're just exploring. But what if some percentage of free trial seekers do convert, or what if they convert later in the customer journey? Incremental testing reveals the true impact.

Set up three campaign versions: Control (no negative), Treatment A ("free trial" as phrase match negative), and Treatment B ("free trial" and related terms like "trial period," "try before buy" as negatives). Split traffic evenly across all three. This multi-variant approach shows both the isolated impact of the primary term and the cumulative effect of expanding the exclusion.

After running for sufficient duration, you might discover that excluding "free trial" alone improved ROAS by 12% with minimal volume impact, but expanding to related terms pushed ROAS up only an additional 3% while cutting conversions by 18%. This tells you the optimal stopping point—add the core term but leave the variations active. These nuanced insights are impossible without proper measurement frameworks that track impact precisely.

Methodology 3: Match Type Variation Testing

The same negative keyword applied with different match types creates dramatically different exclusion patterns. This methodology tests whether your negatives should be scalpels (exact match) or sledgehammers (broad match), and everything in between.

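Consider the negative keyword "jobs." As exact match, it blocks only the query "jobs" on its own. As phrase match, it blocks "marketing jobs," "jobs in Seattle," and any query containing "jobs" as a complete word. As broad match, a single-word negative behaves the same way as phrase match; the difference shows up with multi-word negatives such as "marketing jobs," where broad match blocks any query containing both words in any order ("jobs in marketing") while phrase match requires them in that exact order. Unlike positive keywords, negative keywords do not expand to synonyms or related searches, so none of these match types blocks "job opportunities," "career openings," or "employment positions" unless you add those terms yourself. Even so, the impact difference between match types can be large.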

Create three treatment groups plus one control: Control (no negative), Treatment A (exact match), Treatment B (phrase match), and Treatment C (broad match). Use the same negative keywords across all treatment groups—only the match type varies. This isolates match type as the single variable.

Track search term reports carefully during this test. You're not just measuring aggregate performance—you need to see which specific queries each match type blocked. Treatment B might show the best efficiency gains, but when you examine the search term report, you discover it blocked some unexpected variations that actually converted well in the control group. This might lead you to use phrase match for most negatives but add back specific valuable variations as positive keywords or use exact match negatives for terms with legitimate alternate meanings.
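Before launching a match type test, it can help to preview which historical search terms each variant would block. The sketch below is a simplified model with a hypothetical `is_blocked` helper. It assumes literal, whole-word matching with no synonym expansion, which is how Google documents negative keyword behavior as far as I know; it does not model misspellings, plurals, or any other platform-side handling.

```python
def is_blocked(query, negative, match_type):
    """Return True if `negative` (with the given match type) would block `query`.

    Simplified model: lowercase, whitespace-split, whole-word comparison only.
    """
    q = query.lower().split()
    n = negative.lower().split()
    if match_type == "exact":
        # Blocks only when the query is exactly the negative keyword.
        return q == n
    if match_type == "phrase":
        # Blocks when the negative's words appear contiguously and in order.
        return any(q[i:i + len(n)] == n for i in range(len(q) - len(n) + 1))
    if match_type == "broad":
        # Blocks when every word of the negative appears, in any order.
        return all(word in q for word in n)
    raise ValueError(f"unknown match type: {match_type}")


search_terms = ["jobs", "marketing jobs", "jobs in marketing", "job opportunities"]
for match_type in ("exact", "phrase", "broad"):
    blocked = [t for t in search_terms if is_blocked(t, "marketing jobs", match_type)]
    print(match_type, "->", blocked)
```

Running each treatment's negative list against the control group's search term export gives a term-level view of what each match type would have removed, which complements the aggregate metrics.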

This testing methodology is essential for developing sophisticated negative keyword strategies that balance protection and opportunity. The findings from match type testing inform your entire approach to data-driven negative keyword list construction across all your campaigns.

Methodology 4: Competitive Term Isolation Testing

Competitor terms deserve special testing attention because the decision to bid on or exclude them involves complex strategic considerations beyond simple ROAS math. This methodology isolates competitor traffic to understand its true value and characteristics.

Your search term report shows clicks on "[Competitor Name] alternative," "[Competitor Name] vs," and "[Competitor Name] pricing." These terms might have lower conversion rates than your brand terms, but they represent high-intent users actively evaluating solutions. The question isn't just "Do they convert?" but "What's their lifetime value? How does their acquisition cost compare to other channels? What's the strategic value of appearing in competitive searches?"

Create a dedicated campaign structure: Campaign A includes your standard keywords without competitor terms (these go as negatives). Campaign B targets only competitor-related queries with specific competitor keyword targeting. Campaign C is your control—broad match or phrase match keywords that might trigger for competitor queries, with no competitor-specific negatives or targeting. This three-way split shows the performance of deliberately targeted competitor traffic versus incidentally captured competitor traffic versus excluding it entirely.

Extend your analysis beyond immediate ROAS. Track these conversions separately in your CRM to measure differences in customer lifetime value, retention rates, and expansion revenue. You might discover that competitor comparison traffic converts at 60% of the rate of branded traffic but generates customers with 140% higher LTV because they're more sophisticated buyers who've already evaluated alternatives. This transforms the strategic calculus entirely.

Methodology 5: Seasonal Variation Testing

Search intent changes with seasons, events, and market conditions. A term that's irrelevant in February might be valuable in November. This methodology tests whether your negative keyword strategy should vary seasonally.

Consider "gift" as a potential negative keyword for a B2B software company. For most of the year, "project management software gift" is probably someone looking for a free giveaway or a literal gift item, not a qualified buyer. But in November and December, some percentage of these searches represent corporate gift licenses or end-of-year budget spending. Should you exclude "gift" year-round, seasonally, or not at all?

Run parallel tests during different seasons with identical methodology. In Q1, test your campaigns with "gift" and related terms as negatives versus control. Track performance. Then in Q4, run the identical test structure with the same negative keywords. Compare not just whether the negatives improved performance, but whether the magnitude and direction of impact changed seasonally.

This approach builds a seasonal negative keyword calendar. You might discover that excluding job-related terms is valuable year-round except during peak hiring seasons in your industry when those searches spike with different intent. Or that price-focused terms perform better during economic uncertainty when budget consciousness is heightened even among qualified buyers. These insights enable dynamic negative keyword strategies that adapt to changing search behavior patterns throughout the year.

Building Your Measurement and Analysis Framework

Rigorous testing requires rigorous measurement. Your analysis framework must go beyond surface-level metrics to capture the full impact of negative keyword changes, including effects that appear in unexpected places.

Primary Performance Metrics

Start with the fundamental metrics that directly measure negative keyword impact. These are your first-order effects—the immediate, observable changes that result from excluding search traffic.

Wasted spend reduction is the most obvious metric. Calculate the cost of clicks on terms that never converted in your control group, then measure how much of that spend was eliminated in your treatment group. But don't stop at simple cost savings. Calculate wasted spend as a percentage of total spend to understand efficiency improvement, and track whether the saved budget was reallocated effectively to higher-performing terms.

Conversion rate changes reveal whether you're successfully filtering low-intent traffic. If your treatment group shows a 15% higher conversion rate, your negatives are working. But segment this analysis by conversion type. Did form fills increase while phone calls decrease? Did free trial signups improve but paid conversions stay flat? These patterns tell you what type of intent you're filtering and whether it aligns with your actual business goals.

Cost per acquisition and ROAS are ultimate efficiency measures, but interpret them carefully in the context of volume. A 30% ROAS improvement means nothing if you achieved it by cutting 70% of your conversions. Calculate efficiency-volume tradeoff curves to identify the optimal point between efficiency and scale. This is where comprehensive health score frameworks become invaluable for balancing multiple objectives.
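One way to keep efficiency and volume in the same view is to compute both changes side by side, along with an absolute figure such as revenue minus ad spend. The following is a minimal sketch: the function name, the dictionary fields, and the example numbers are all hypothetical, and "net return" here ignores margins and other costs.

```python
def efficiency_volume_tradeoff(control, treatment):
    """Compare two test groups on efficiency (ROAS) and scale (conversions).

    Each group is a dict with 'cost', 'revenue' and 'conversions'.
    """
    def roas(group):
        return group["revenue"] / group["cost"]

    def net_return(group):
        # Revenue minus ad spend only; a deliberately crude profit proxy.
        return group["revenue"] - group["cost"]

    return {
        "roas_change": roas(treatment) / roas(control) - 1,
        "conversion_change": treatment["conversions"] / control["conversions"] - 1,
        "net_return_change": net_return(treatment) - net_return(control),
    }


# Hypothetical numbers: ROAS improves 30% while conversions fall 70%.
control = {"cost": 10_000, "revenue": 30_000, "conversions": 300}
treatment = {"cost": 4_000, "revenue": 15_600, "conversions": 90}
result = efficiency_volume_tradeoff(control, treatment)
print(result)
```

In this example the treatment group looks better on ROAS yet returns 8,400 less in absolute terms, which is the pattern the paragraph above warns about.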

Secondary and Diagnostic Metrics

The most valuable insights from negative keyword testing often come from secondary metrics that reveal unintended consequences and hidden opportunities.

Impression share changes indicate how negatives affect your auction participation. If excluding certain terms increased your impression share on remaining queries, your budget is now concentrated on higher-value auctions. But if impression share dropped unexpectedly, you might have blocked terms that were triggering for valuable queries you didn't anticipate. Review the auction insights report to see if competitive dynamics shifted.

Quality Score improvements can result from better traffic filtering. When you exclude irrelevant searches, your CTR improves on the remaining impressions, which signals relevance to Google's algorithm and can improve Quality Scores. Track keyword-level Quality Score changes throughout your test period. A 1-point Quality Score improvement is often estimated to reduce CPCs by 10-20%, though the actual effect varies by auction, and any such saving compounds the direct impact of excluding wasteful terms.

Search term diversity metrics reveal whether your negatives are over-restricting your reach. Count unique search terms triggering your ads in control versus treatment groups. If your treatment group shows 40% fewer unique terms, you're significantly narrowing your reach. This might be intentional and valuable, or it might indicate you're missing long-tail variations with hidden value. Examine the terms that appeared in control but not treatment—are they all obviously irrelevant, or did you exclude some potential opportunity?
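The diversity check is a set comparison, so it is easy to script against two search term exports. This sketch assumes you have the control group's terms with their conversion counts and the treatment group's terms as a plain list; the function name and sample data are hypothetical.

```python
def diversity_report(control_terms, treatment_terms):
    """Compare unique search terms between groups.

    control_terms   : dict mapping search term -> conversions in the control group
    treatment_terms : iterable of search terms seen in the treatment group
    Returns (fractional reduction in unique terms,
             converting control terms absent from treatment, best first).
    """
    treatment = set(treatment_terms)
    reduction = 1 - len(treatment) / len(control_terms)
    missing_converters = sorted(
        (term for term, conv in control_terms.items()
         if term not in treatment and conv > 0),
        key=lambda term: control_terms[term],
        reverse=True,
    )
    return reduction, missing_converters


control_terms = {
    "crm software": 12,
    "cheap crm software": 3,
    "crm software free": 0,
    "best crm for startups": 5,
    "affordable crm": 1,
}
treatment_terms = ["crm software", "best crm for startups", "crm software free"]
reduction, missing = diversity_report(control_terms, treatment_terms)
print(f"{reduction:.0%} fewer unique terms; converting terms lost: {missing}")
```

Terms that converted in control but never appeared in treatment are the ones to review by hand before rolling the negatives out.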

Statistical Rigor in Analysis

Proper statistical analysis separates real performance changes from random variance. This is where many PPC managers fall short, declaring success based on trends that aren't actually statistically significant.

Calculate statistical significance for all primary metrics using appropriate tests. For conversion rate differences, use a two-proportion z-test. For ROAS and CPA changes, use t-tests for means, computed on per-day or per-conversion values rather than on the two aggregate ratios; because revenue and cost data are often heavily skewed, a bootstrap comparison is a reasonable cross-check. Don't rely on platform-reported confidence levels alone; calculate them independently from your raw data. Require 95% confidence (p-value < 0.05) before declaring a result significant, and consider using 99% confidence for major strategic decisions.
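For the conversion rate comparison, the two-proportion z-test needs only the click and conversion counts from each group. Here is a minimal sketch using the pooled-variance form of the test; the function name and the example counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_control, clicks_control, conv_treatment, clicks_treatment):
    """Two-sided z-test for a difference in conversion rates (pooled standard error)."""
    p_control = conv_control / clicks_control
    p_treatment = conv_treatment / clicks_treatment
    pooled = (conv_control + conv_treatment) / (clicks_control + clicks_treatment)
    se = sqrt(pooled * (1 - pooled) * (1 / clicks_control + 1 / clicks_treatment))
    z = (p_treatment - p_control) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 3.8% control vs 4.2% treatment on 10,000 clicks each.
z, p_value = two_proportion_z_test(380, 10_000, 420, 10_000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

With these example counts the p-value is well above 0.05, so a 3.8% versus 4.2% gap on 10,000 clicks per group would not yet count as significant.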

When running multiple comparisons (testing several negative keyword groups simultaneously), adjust for multiple testing using Bonferroni correction or similar methods. If you're testing five different negative keyword categories, your significance threshold should be 0.05/5 = 0.01 to maintain overall 95% confidence. Without this adjustment, you'll identify false positives—negative keyword changes that appear to work but are actually random variation.
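The Bonferroni adjustment described above amounts to dividing the significance level by the number of comparisons. A minimal sketch, with hypothetical category names and p-values:

```python
def bonferroni_significant(p_values, alpha=0.05):
    """Flag which comparisons stay significant after a Bonferroni correction.

    p_values : dict mapping test name -> unadjusted p-value
    Returns (adjusted threshold, dict of test name -> bool).
    """
    threshold = alpha / len(p_values)
    return threshold, {name: p < threshold for name, p in p_values.items()}


# Hypothetical p-values from five category-level negative keyword tests.
p_values = {
    "informational": 0.004,
    "price": 0.030,
    "comparison": 0.200,
    "jobs": 0.009,
    "free": 0.048,
}
threshold, verdicts = bonferroni_significant(p_values)
print(f"threshold = {threshold:.3f}", verdicts)
```

Here "price" and "free" would pass an unadjusted 0.05 threshold but fail the corrected one, which is exactly the kind of result the correction is meant to filter out. Bonferroni is conservative; less strict alternatives exist, but it is the simplest to apply and explain.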

Report confidence intervals, not just point estimates. Instead of saying "Treatment group had 4.2% conversion rate versus 3.8% control," report "Treatment group conversion rate was 4.2% (95% CI: 3.9%-4.5%) versus control 3.8% (95% CI: 3.5%-4.1%)." This reveals how precisely each rate is measured. If the confidence intervals overlap substantially, the observed difference might not be real. Overlap alone is only a rough guide, though, so also report the confidence interval for the difference itself: if that interval includes zero, the test has not shown a reliable effect.
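Intervals like the ones quoted above can be computed with the normal (Wald) approximation. In this sketch the click counts are hypothetical, chosen so that the intervals roughly match the 4.2% and 3.8% example; the function names are also illustrative.

```python
from math import sqrt
from statistics import NormalDist

Z_95 = NormalDist().inv_cdf(0.975)  # about 1.96


def rate_ci(conversions, clicks):
    """95% Wald confidence interval for a single conversion rate."""
    p = conversions / clicks
    half_width = Z_95 * sqrt(p * (1 - p) / clicks)
    return p - half_width, p + half_width


def difference_ci(conv_control, clicks_control, conv_treatment, clicks_treatment):
    """95% confidence interval for (treatment rate - control rate)."""
    p_c = conv_control / clicks_control
    p_t = conv_treatment / clicks_treatment
    se = sqrt(p_c * (1 - p_c) / clicks_control + p_t * (1 - p_t) / clicks_treatment)
    diff = p_t - p_c
    return diff - Z_95 * se, diff + Z_95 * se


# Hypothetical counts: 714/17,000 = 4.2% treatment, 646/17,000 = 3.8% control.
treatment_ci = rate_ci(714, 17_000)
control_ci = rate_ci(646, 17_000)
diff_ci = difference_ci(646, 17_000, 714, 17_000)
print(treatment_ci, control_ci, diff_ci)
```

With these counts the interval for the difference narrowly includes zero, so the 0.4-point gap would not be treated as a confirmed improvement. The Wald approximation is adequate at these volumes; for very small samples or rates near zero, a Wilson interval is a safer choice.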

Advanced Testing Techniques for Sophisticated Accounts

Once you've mastered basic A/B testing frameworks, these advanced techniques enable more nuanced insights and faster optimization cycles.

Sequential Testing and Early Stopping Rules

Traditional A/B testing requires running your experiment for a predetermined duration regardless of results. Sequential testing allows you to check results at predetermined intervals and stop early if you reach conclusive results, saving time and budget while maintaining statistical validity.

Define your early stopping rules before launching the test. For example: "We'll check results every 7 days. If at any checkpoint we observe a statistically significant difference with p < 0.01 and at least 50 conversions per group, we'll conclude the test. If we haven't reached significance by day 56, we'll conclude the test is inconclusive." These predefined rules prevent p-hacking while enabling faster iteration.
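Writing the stopping rule as code before launch makes it harder to bend later. The sketch below encodes the example rule from the paragraph above (weekly checks, p < 0.01, at least 50 conversions per group, inconclusive at day 56); the function name and return strings are hypothetical.

```python
def checkpoint_decision(day, p_value, conv_control, conv_treatment,
                        alpha=0.01, min_conversions=50, max_days=56):
    """Apply a predefined early stopping rule at a scheduled checkpoint."""
    enough_data = min(conv_control, conv_treatment) >= min_conversions
    if p_value < alpha and enough_data:
        return "stop: significant"
    if day >= max_days:
        return "stop: inconclusive"
    return "continue"


# Hypothetical checkpoints: (day, p-value, control conversions, treatment conversions)
checkpoints = [(7, 0.004, 22, 31), (14, 0.030, 48, 60), (21, 0.006, 71, 95)]
for day, p, conv_c, conv_t in checkpoints:
    print(day, checkpoint_decision(day, p, conv_c, conv_t))
```

Note that the day 7 checkpoint continues despite a low p-value because the minimum conversion count has not been met, which guards against stopping on an early fluke.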

Use methods designed for interim looks, such as sequential probability ratio tests (SPRT), group-sequential designs with alpha-spending boundaries, or Bayesian approaches built for early stopping. The frequentist versions adjust the significance threshold at each checkpoint to account for multiple looks at the data, maintaining overall statistical validity. Several online calculators and tools implement these methods specifically for conversion rate testing.

Multi-Armed Bandit Algorithms

While traditional A/B tests split traffic evenly between control and treatment, multi-armed bandit algorithms dynamically shift more traffic to better-performing variations as the test progresses. This reduces the opportunity cost of testing and can find winners faster.

For negative keyword testing, this might mean starting with four different negative keyword list variations (conservative, moderate, aggressive, and control). The algorithm initially sends 25% of traffic to each. As data accumulates, it begins favoring the variation with the best performance, eventually sending 70-80% of traffic to the winner while maintaining smaller percentages on other variations to ensure the winner remains optimal as conditions change.
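One common bandit approach is Thompson sampling, where each variation's traffic share is set to the estimated probability that it is the best performer. The sketch below is one possible implementation, not a description of any Google Ads feature: it optimizes conversion rate only, uses a uniform Beta(1, 1) prior, and the arm names and counts are hypothetical.

```python
import random


def thompson_allocation(arms, draws=10_000, seed=0):
    """Estimate each arm's probability of being best via Thompson sampling.

    arms : dict mapping arm name -> (conversions, clicks) observed so far
    Returns a dict of arm name -> suggested traffic share (shares sum to 1).
    """
    rng = random.Random(seed)
    wins = dict.fromkeys(arms, 0)
    for _ in range(draws):
        # Sample a plausible conversion rate for each arm from its Beta posterior.
        samples = {
            name: rng.betavariate(1 + conv, 1 + clicks - conv)
            for name, (conv, clicks) in arms.items()
        }
        wins[max(samples, key=samples.get)] += 1
    return {name: count / draws for name, count in wins.items()}


arms = {
    "control": (30, 1_000),
    "conservative": (35, 1_000),
    "moderate": (60, 1_000),
    "aggressive": (20, 1_000),
}
allocation = thompson_allocation(arms)
print(allocation)
```

In practice you would cap the leading arm's share (for example at the 70-80% mentioned above) so the remaining arms keep receiving enough traffic to detect a change in conditions.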

The tradeoff is complexity and somewhat less clean statistical interpretation. Bandit algorithms are better suited to ongoing optimization than to answering specific yes/no questions. Use them when you want to continuously improve performance rather than validate specific hypotheses about negative keyword categories.

Holdout Groups for Long-Term Validation

After implementing negative keywords based on test results, maintain a small holdout group (5-10% of traffic) that continues running without those negatives. This ongoing validation detects if the performance impact changes over time or if your initial test results don't hold up at scale.

When you roll out winning negative keyword changes to your main campaigns, create a small parallel campaign with identical settings except no negative keywords. Give it 5-10% of your daily budget. Monitor this holdout campaign continuously. If it starts outperforming your main campaigns, you know search behavior or intent patterns have shifted and your negative keywords need reevaluation.

This approach catches performance degradation early. Search intent changes seasonally, competitively, and as your market matures. Negative keywords that worked brilliantly in Q1 might be blocking valuable traffic by Q4. Holdout groups serve as an early warning system, triggering retests before performance significantly degrades. This is particularly valuable for understanding how search intent evolves and ensuring your negative keyword strategy adapts accordingly.

Common Testing Pitfalls and How to Avoid Them

Even experienced PPC managers make critical mistakes in negative keyword testing. Recognizing these pitfalls before you encounter them saves budget and prevents false conclusions.

Pitfall 1: Insufficient Traffic Volume

The most common mistake is testing in campaigns with too little traffic to reach statistical significance in reasonable timeframes. If your campaign generates 5 conversions per month, you'll need 20 months just to accumulate 100 conversions, and about 40 months to reach 100 in each arm of a 50/50 split. By that time, market conditions will have changed so dramatically that your results are meaningless.

Before launching a test, calculate required sample size and estimate time to achieve it. If the timeline exceeds 8-12 weeks, either expand the test scope to include multiple similar campaigns (increasing volume), or use directional testing with clearly documented limitations rather than claiming statistical certainty. Alternatively, focus your testing efforts on higher-volume campaigns and apply learnings cautiously to lower-volume campaigns.

Pitfall 2: Test Group Contamination

Contamination occurs when your control and treatment groups aren't truly independent. The most common form: running duplicate campaigns that compete in the same auctions. If both your control and treatment campaigns target the same keywords in the same locations at the same times, they're bidding against each other, artificially inflating costs for both groups and invalidating your results.

Use Google's Campaign Experiments feature for true cookie-based splitting, which ensures each user only sees one version. If that's not possible, implement strict separation: geographic splits (control runs in western states, treatment in eastern states), time-based splits (control runs Monday/Wednesday/Friday, treatment runs Tuesday/Thursday/Saturday), or account-level splits for agencies testing across multiple similar clients. Because regions and weekdays differ in their own right, match the groups on historical performance and, where you can, swap the assignments partway through the test. Never run competing campaigns in the same auctions simultaneously.

Pitfall 3: Confounding Variables

Confounding occurs when you change multiple variables simultaneously, making it impossible to attribute results to your negative keywords. If you add negative keywords AND adjust bids AND launch new ad copy during your test period, you can't know which change drove the performance difference.

Implement change freezes during testing periods. Document that during your 6-week negative keyword test, no other optimizations will be made to the test campaigns. If urgent changes are necessary (budget adjustments for unexpected performance spikes, pausing due to website outage), apply them equally to both control and treatment groups and document the change as a potential confounding factor in your analysis.

Pitfall 4: The Peeking Problem

Peeking means checking your test results repeatedly and stopping the test as soon as you see statistical significance. This dramatically increases false positive rates because random variance creates temporary significant results that disappear if you keep testing. If you check results daily for 30 days and stop the first time you see p < 0.05, your actual false positive rate is much higher than 5%.
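The inflation is easy to demonstrate with a simulation of A/A tests, where both groups share the same true conversion rate and every "significant" result is a false positive by construction. This is an illustrative sketch with hypothetical traffic numbers (100 clicks per group per day at a 10% conversion rate); exact rates will vary with the seed and settings.

```python
import random
from math import sqrt
from statistics import NormalDist


def p_value(conv_a, conv_b, clicks_each):
    """Two-sided two-proportion z-test p-value for equal-sized groups."""
    pooled = (conv_a + conv_b) / (2 * clicks_each)
    if pooled in (0, 1):
        return 1.0
    se = sqrt(pooled * (1 - pooled) * 2 / clicks_each)
    z = (conv_b - conv_a) / clicks_each / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_peeking(n_tests=300, days=30, clicks_per_day=100, cvr=0.10, seed=1):
    """Return (false positive rate with daily peeking, rate with one final look)."""
    rng = random.Random(seed)
    peeking_hits = final_hits = 0
    for _ in range(n_tests):
        conv_a = conv_b = 0
        ever_significant = False
        for day in range(1, days + 1):
            conv_a += sum(rng.random() < cvr for _ in range(clicks_per_day))
            conv_b += sum(rng.random() < cvr for _ in range(clicks_per_day))
            p = p_value(conv_a, conv_b, day * clicks_per_day)
            ever_significant = ever_significant or p < 0.05
        peeking_hits += ever_significant   # would have stopped at some peek
        final_hits += p < 0.05             # only the day-30 result counts
    return peeking_hits / n_tests, final_hits / n_tests


peeking_rate, final_rate = simulate_peeking()
print(f"daily peeking: {peeking_rate:.0%} false positives; single final look: {final_rate:.0%}")
```

The single-look rate should land near the nominal 5%, while the stop-at-first-significance rate comes out several times higher even though no real difference exists.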

Set predetermined test duration and analysis schedules before launching. Commit to running the test for the full planned duration regardless of interim results. If you must check early results (for budget protection or stakeholder updates), use sequential testing methods with adjusted significance thresholds, or calculate Bayesian credible intervals that account for interim monitoring. Never make the stopping decision based on "it looks good" after three days.

Your Step-by-Step Testing Workflow

Here's a practical workflow for implementing rigorous negative keyword testing, from hypothesis to scaled implementation.

Phase 1: Hypothesis Development and Planning (Week 1)

Begin by analyzing your search term reports to identify testing opportunities. Export 3-6 months of search term data and calculate performance metrics for different categories. Look for patterns: Are informational queries underperforming? Are competitor terms converting differently than expected? Are there obvious wasteful terms consuming significant budget?
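A first pass at this analysis can be scripted against a search term export. The sketch below groups terms by modifier and computes conversion rate per category; the category lists, field names, and sample rows are hypothetical and would need adapting to your own export format.

```python
from collections import defaultdict

# Hypothetical modifier lists; extend these for your own account.
CATEGORY_MODIFIERS = {
    "informational": ["how to", "what is", "guide to"],
    "price": ["cheap", "affordable", "discount"],
    "comparison": ["vs", "versus", "compared to"],
}


def categorize(term):
    """Return the first category whose modifier appears as whole words in the term."""
    padded = f" {term.lower()} "
    for category, modifiers in CATEGORY_MODIFIERS.items():
        if any(f" {modifier} " in padded for modifier in modifiers):
            return category
    return "other"


def summarize(rows):
    """Aggregate clicks, cost and conversions per category and add conversion rate."""
    totals = defaultdict(lambda: {"clicks": 0, "cost": 0.0, "conversions": 0})
    for row in rows:
        bucket = totals[categorize(row["term"])]
        for key in ("clicks", "cost", "conversions"):
            bucket[key] += row[key]
    return {
        category: {**t, "cvr": t["conversions"] / t["clicks"] if t["clicks"] else 0.0}
        for category, t in totals.items()
    }


rows = [
    {"term": "how to set up crm", "clicks": 120, "cost": 180.0, "conversions": 1},
    {"term": "crm software pricing", "clicks": 200, "cost": 500.0, "conversions": 8},
    {"term": "what is a crm", "clicks": 80, "cost": 100.0, "conversions": 0},
    {"term": "cheap crm", "clicks": 50, "cost": 60.0, "conversions": 1},
]
summary = summarize(rows)
for category, stats in summary.items():
    print(category, stats)
```

Categories with a large share of clicks and a conversion rate far below the account average are the natural candidates for a first hypothesis.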

Document specific hypotheses with quantified predictions. "We hypothesize that excluding informational queries (containing 'how to,' 'what is,' 'guide to') will reduce wasted spend by $2,000-3,000 monthly (18-25% of current waste) while maintaining 95%+ of current conversion volume, because these queries show 0.8% CVR versus 3.9% account average and represent 12% of click volume but only 2.4% of conversions."

Calculate required sample size and test duration. If you need 100 conversions per group and currently generate 80 conversions weekly, a 50/50 split gives each group about 40 conversions per week, so you need roughly 2.5 weeks to reach 100 per group. Build in buffer for variance and round up to 4 weeks. Create a test calendar identifying start date, checkpoint dates (if using sequential testing), and predetermined end date.
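The duration arithmetic is worth scripting so it can be rerun for each campaign. This is a minimal sketch with a hypothetical function name; it assumes conversions arrive at a steady weekly rate and that the smaller arm of the split is the bottleneck.

```python
def weeks_to_target(conversions_per_group, weekly_conversions, treatment_share=0.5):
    """Weeks until the smaller arm reaches the target, before any safety buffer."""
    smallest_share = min(treatment_share, 1 - treatment_share)
    return conversions_per_group / (weekly_conversions * smallest_share)


print(weeks_to_target(100, 80))   # campaign with 80 conversions per week
print(weeks_to_target(100, 10))   # campaign with 10 conversions per week
```

Round the result up to whole weeks and then add buffer, so that the test always covers complete weekly cycles.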

Phase 2: Test Setup and Launch (Week 2)

Create your control and treatment campaign structures. If using Campaign Experiments, configure your experiment in Google Ads with 50/50 traffic split. If using manual campaign duplication, create identical campaigns and implement your splitting strategy (geographic, temporal, or account-based). Verify that all settings match exactly: same keywords, same bids, same targeting, same ad copy, same landing pages.

Apply your negative keywords only to the treatment group. Double-check match types—are you testing broad, phrase, or exact match negatives? Verify the negatives applied correctly by checking the negative keywords tab. A common mistake is adding negatives at the wrong level (account versus campaign versus ad group) or using incorrect match type syntax.

Configure tracking and reporting. Set up custom columns or reports that track your primary and secondary metrics for both control and treatment groups. Create a spreadsheet or dashboard that you'll update at predetermined intervals. Pre-build your statistical significance calculators with formulas ready—you'll just paste in data at each checkpoint.

Phase 3: Monitoring and Data Collection (Weeks 3-6)

During the test period, resist the urge to make changes. Monitor for technical issues (campaigns paused accidentally, budget constraints limiting delivery, etc.) but don't optimize. If you must make changes for business reasons, apply them identically to both groups and document them.

At predetermined checkpoints (typically weekly), collect data but don't make decisions unless using sequential testing with predefined stopping rules. Record your metrics, calculate interim statistics, but commit to your planned test duration. This discipline is what separates rigorous testing from optimization theater.

Conduct qualitative analysis of search term reports. Even though you won't end the test early, reviewing which specific terms are being blocked versus allowed in each group builds intuition for the final analysis. You might notice patterns that inform future tests or reveal unexpected interactions worth investigating.

Phase 4: Analysis and Decision Making (Week 7)

At your predetermined end date, pull final data and conduct complete statistical analysis. Calculate significance for all primary metrics. Examine confidence intervals. Check for confounding factors that might have influenced results. Review your hypothesis predictions versus actual outcomes—were your predictions accurate? This builds calibration for future hypothesis development.

Segment your analysis to uncover nuanced insights. Break down results by device, location, time of day, and audience characteristics. You might discover that your negative keywords improved desktop performance but hurt mobile performance, suggesting device-specific intent differences that warrant separate strategies.

Make your implementation decision based on complete evidence, not just statistical significance. A statistically significant 3% ROAS improvement might not be operationally meaningful if it comes with 25% volume reduction. Consider whether the results align with your strategic objectives, whether the operational complexity of implementation is justified by the benefit, and whether the results are robust across segments or dependent on specific conditions.

Phase 5: Scaled Implementation and Validation (Week 8+)

If your test showed clear positive results, implement the winning negative keyword strategy across your full campaign structure. Don't just copy-paste—thoughtfully adapt the learnings to different campaigns based on their specific characteristics. A negative keyword strategy that works for broad match campaigns might need adjustment for exact match campaigns.

Maintain your holdout group for ongoing validation. Keep 5-10% of your campaigns or budget running without the new negatives. Monitor this holdout continuously. Set up alerts that trigger if the holdout begins outperforming your main campaigns by more than 10%, indicating that your negative keyword strategy might need reevaluation.
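The alert itself can be a small scheduled check against your reporting data. In this sketch the comparison metric is ROAS, which is an assumption (the same logic works for conversion rate or CPA), and the function name and figures are hypothetical.

```python
def holdout_alert(main, holdout, threshold=0.10):
    """Flag when the holdout's ROAS beats the main campaigns by more than `threshold`.

    main, holdout : dicts with 'revenue' and 'cost' for the same date range
    Returns (alert flag, relative ROAS gap of holdout versus main).
    """
    main_roas = main["revenue"] / main["cost"]
    holdout_roas = holdout["revenue"] / holdout["cost"]
    relative_gap = holdout_roas / main_roas - 1
    return relative_gap > threshold, relative_gap


main = {"revenue": 90_000, "cost": 30_000}      # ROAS 3.0
holdout = {"revenue": 7_000, "cost": 2_000}     # ROAS 3.5
alert, gap = holdout_alert(main, holdout)
print(alert, f"{gap:.1%}")
```

Because the holdout receives only 5-10% of traffic, its metrics are noisy; evaluate the alert over a window of several weeks and confirm with a significance test before retiring any negatives.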

Document learnings and queue next tests. Every test answers some questions and raises new ones. Your informational query exclusion test might reveal that comparison terms deserve their own test. Build a testing roadmap that systematically validates and optimizes every aspect of your negative keyword strategy over time. This transforms testing from one-off experiments into a systematic optimization engine.

From Testing to Continuous Optimization

Scientific A/B testing methodologies transform negative keyword management from reactive cleanup to proactive optimization. Instead of adding negatives when you notice wasteful terms, you're systematically testing hypotheses about search intent, validating strategies with statistical rigor, and building an evidence-based framework that gets smarter with every test cycle.

The benefits extend beyond immediate performance improvements. Rigorous testing builds organizational capability and confidence. You can defend your optimization decisions with data rather than opinions. You can identify best practices that scale across accounts. You can detect when strategies stop working before they significantly damage performance. You develop intuition calibrated by evidence rather than assumptions.

Start with one well-designed test rather than trying to test everything simultaneously. Choose a high-impact hypothesis where you have sufficient traffic volume to reach conclusions in reasonable timeframes. Execute the testing workflow rigorously, resist the urge to peek and adjust, and conduct thorough analysis. That first successful test builds the foundation for systematic optimization that compounds over time.

The negative keyword testing lab isn't a destination—it's a discipline. By treating your optimization strategies as hypotheses requiring validation rather than assumptions requiring implementation, you build campaigns that improve continuously based on evidence rather than guesswork. That's the difference between hoping your negative keywords are working and proving they are.
