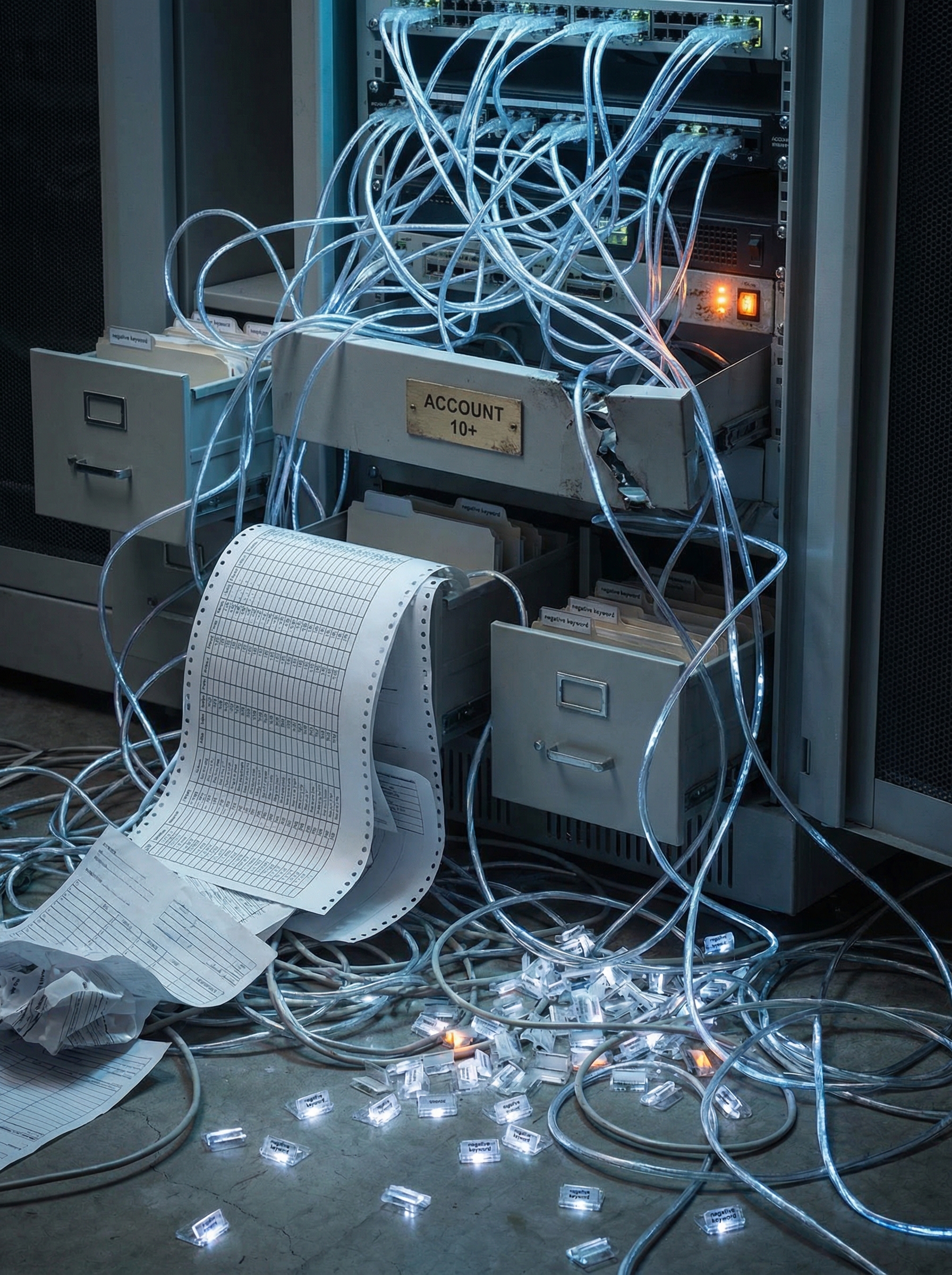

The Manual Negative Keyword Trap: Why Spreadsheet-Based Workflows Break at 10+ Accounts

The Breaking Point: When Manual Workflows Stop Scaling

For PPC agencies managing a handful of client accounts, spreadsheet-based negative keyword workflows might seem manageable. You download search term reports, filter columns in Excel, cross-reference existing negative lists, and upload exclusions manually. The process is tedious but functional. Then you hit 10 accounts. Then 15. Then 20. Suddenly, what worked for three clients becomes a productivity nightmare that consumes entire days and leaves critical optimization opportunities buried in unreviewed data.

This is the manual negative keyword trap. It's the point where spreadsheet-based workflows break under their own weight, where the time required to review search terms exceeds the time available, and where agencies face a choice: sacrifice quality, burn out the team, or find a better system. According to research on agency scalability, most agencies use multiple disconnected systems that create data fragmentation, making manual processes even more inefficient as client counts grow.

This article examines why spreadsheet-based negative keyword management fails at scale, the hidden costs of manual workflows, and how agencies can transition to scalable systems that maintain optimization quality across dozens of accounts without burning out their PPC teams.

Why Spreadsheets Work Initially (And Why That's Deceptive)

When you're managing 3-5 Google Ads accounts, spreadsheet-based negative keyword workflows deliver results. You download search term reports from each account, consolidate them in Excel or Google Sheets, apply filters to identify irrelevant queries, and upload negative keyword lists manually. The process takes 2-3 hours per account per week—manageable for a small team.

Spreadsheets offer several advantages at this scale: complete visibility into every search term, full control over exclusion decisions, no additional software costs, and the ability to customize workflows to each client's unique needs. For agencies with deep expertise and limited account loads, this manual approach can produce excellent results.

But this early success is deceptive. At best, spreadsheet workflows scale linearly with account count: 10 accounts require roughly double the time of 5 accounts. Meanwhile, complexity grows faster than the account count. Each account has different business contexts, keyword strategies, and exclusion priorities that can't be standardized into simple spreadsheet formulas. What felt efficient at 5 accounts becomes unsustainable at 15.

The 10-Account Threshold: Where Manual Workflows Break Down

The 10-account threshold isn't arbitrary. It represents the point where manual negative keyword management requires more time than most PPC managers have available, forcing compromises that reduce optimization quality across all accounts. Here's why this threshold matters and what breaks first.

The Time Mathematics of Manual Review

Consider the actual time requirements. A typical Google Ads account generates 200-500 unique search terms per week that require review. At 10 accounts, that's 2,000-5,000 terms weekly. Even at an aggressive 5 seconds per term (barely enough to read the query and make a decision), you're looking at roughly 2.8 to 6.9 hours just reviewing search terms, before any analysis, cross-referencing, or upload work.

Add in the hidden time costs: downloading reports from each account (10-15 minutes), consolidating data into workable spreadsheets (15-20 minutes), cross-referencing against existing negative lists to avoid duplicates (20-30 minutes), formatting for upload (10-15 minutes), and actually uploading to each account (15-20 minutes). Suddenly you're at 10-12 hours per week minimum just for negative keyword management across 10 accounts.

That's 25-30% of a full-time PPC manager's week devoted entirely to one maintenance task. For agencies managing 50+ client accounts, the mathematics become impossible without massive compromises.
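The review-time arithmetic above can be reproduced with a short sketch. The inputs are the illustrative figures quoted in this section, the 40-hour work week is an assumption, and the function name is hypothetical.

```python
# Rough estimate of the time spent reading and deciding on search terms.
# Inputs are the illustrative figures from the text, not measurements.

def review_hours(accounts: int, terms_per_account: int, seconds_per_term: float) -> float:
    """Hours per week spent only on reviewing search terms."""
    return accounts * terms_per_account * seconds_per_term / 3600

low_hours = review_hours(accounts=10, terms_per_account=200, seconds_per_term=5)
high_hours = review_hours(accounts=10, terms_per_account=500, seconds_per_term=5)
print(f"Review only: {low_hours:.1f} to {high_hours:.1f} hours per week")

# Share of an assumed 40-hour week taken by the 10-12 hour total quoted above.
WORK_WEEK_HOURS = 40
share_low = 10 / WORK_WEEK_HOURS
share_high = 12 / WORK_WEEK_HOURS
print(f"Share of week: {share_low:.0%} to {share_high:.0%}")
```

Changing `accounts` to 20 or 50 shows how quickly the review time alone outgrows a single manager's week.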

The Context Switching Penalty

Spreadsheet workflows force constant context switching between accounts. You're not just reviewing search terms—you're mentally shifting between an e-commerce client selling luxury furniture, a B2B SaaS company, a local service business, and a healthcare provider. Each requires different judgment about what constitutes an irrelevant search.

This cognitive load increases error rates and slows decision-making. A search term like "cheap options" might be irrelevant for the luxury furniture client but valuable for the budget SaaS provider. Making these judgments accurately requires holding each client's business context, keyword strategy, and campaign goals in your head simultaneously—a mental burden that grows exponentially with account count.

The result is quality degradation. Managers start using shortcuts, applying broader exclusions than necessary, or worse—skipping review cycles entirely for lower-spending accounts. The accounts that need optimization most (the struggling ones) often receive the least attention because they don't justify the time investment in a manual workflow.

Version Control and Data Fragmentation

Spreadsheet-based workflows create version control nightmares. You have a master negative keyword list for Client A in one file, search term reports from three different date ranges in others, uploaded negatives in Google Ads that might not match your spreadsheet, and team members making updates without clear change tracking.

This fragmentation makes it impossible to answer basic questions: What negative keywords are currently active? When were they added? Which search terms triggered their addition? What's the historical performance impact? According to agency reporting best practices research, data fragmentation across disconnected systems is one of the primary obstacles to scaling client services effectively.

Without clear audit trails, you can't learn from past decisions or systematically improve your exclusion strategies. Each account becomes a black box of accumulated manual changes with no institutional knowledge about why specific decisions were made.
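To make the audit-trail idea concrete, here is a minimal sketch of a change log that can answer the questions above. The record fields, account name, and sample entries are all hypothetical; this is one possible structure, not a description of any specific tool.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass(frozen=True)
class NegativeKeywordChange:
    """One entry in a hypothetical negative keyword audit log."""
    account: str
    keyword: str
    added_on: date
    triggered_by: str                   # search term that prompted the exclusion
    reason: str                         # why the reviewer excluded it
    removed_on: Optional[date] = None   # None means the negative is still active

def active_negatives(log, account):
    """Answer 'what is currently active?' for a single account."""
    return sorted(
        entry.keyword
        for entry in log
        if entry.account == account and entry.removed_on is None
    )

# Made-up sample entries for illustration only.
log = [
    NegativeKeywordChange("Client A", "jobs", date(2024, 1, 8),
                          "furniture store jobs", "job seekers, not buyers"),
    NegativeKeywordChange("Client A", "cheap", date(2024, 1, 15),
                          "cheap sofa", "off-brand for luxury positioning",
                          removed_on=date(2024, 3, 1)),
]
print(active_negatives(log, "Client A"))
```

Because every entry records when it was added, what triggered it, and why, the same log also supports the historical questions that scattered spreadsheets cannot answer.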

The Hidden Costs: What Spreadsheet Workflows Actually Cost Agencies

The direct time costs of manual negative keyword management are obvious. But spreadsheet workflows carry hidden costs that don't show up in time tracking—costs that compound as agencies scale and ultimately limit growth potential.

Opportunity Cost: Strategic Work Displaced by Manual Tasks

Every hour spent reviewing search terms in spreadsheets is an hour not spent on strategic work that actually grows accounts: testing new campaign structures, analyzing competitive dynamics, developing creative strategies, or conducting quarterly business reviews with clients. For PPC managers capable of driving 20-35% ROAS improvements through strategic optimization, spending 30% of their time on manual data processing represents massive opportunity cost.

Agencies compound this problem by assigning highly skilled PPC strategists to spreadsheet work because they're the only ones with judgment to make good exclusion decisions. This misallocates your most valuable talent to your lowest-value activities—a productivity trap that prevents both individual and agency growth.

Error Rates and Compounding Waste

Manual workflows introduce error rates that accumulate over time. You accidentally block a valuable search term, missing the subtle difference between "business software" and "business software training." Or you miss an expensive irrelevant term buried in row 847 of a 1,200-row spreadsheet. These errors are inevitable at scale—human attention simply can't maintain perfect accuracy across thousands of weekly decisions.

The impact compounds: A blocked valuable term might cost you $200 in missed conversions per month. An unblocked wasteful term might cost $150 in irrelevant clicks. Across 10 accounts, even one to three such errors per account adds up to roughly $2,000-$5,000 in monthly waste or missed opportunity, directly attributable to manual workflow limitations.

This matters particularly because the average advertiser already wastes 15-30% of their Google Ads budget on irrelevant clicks, according to industry benchmarks. Manual workflows that introduce additional errors make this problem worse, not better. For agencies managing multi-client workflows, reducing this waste systematically is critical to demonstrating value.

Client Churn from Inconsistent Optimization

Spreadsheet workflows make it nearly impossible to deliver consistent optimization quality across all accounts. Your largest clients receive thorough weekly reviews because they justify the time investment. Smaller accounts get reviewed monthly, if that. Medium-sized accounts fall somewhere in between, depending on who has capacity that week.

Clients notice these inconsistencies. When one account receives detailed negative keyword reports and measurable waste reduction while another seems neglected, it creates perception problems that drive churn. Even if smaller accounts don't justify intensive manual optimization economically, the perception of unequal service quality damages agency relationships and limits expansion opportunities.

The economics of client retention make this particularly costly. Acquiring a new client typically costs 5-7x more than retaining an existing one. Losing even 2-3 small accounts annually to perceived service inconsistency erodes profitability significantly—costs that don't show up in time tracking but directly impact agency growth.

What Breaks First: The Failure Modes of Manual Workflows

When spreadsheet-based negative keyword workflows reach their breaking point, they don't fail catastrophically—they degrade gradually. Understanding these failure modes helps agencies recognize when they've hit the manual workflow ceiling and need to transition to scalable systems.

Review Frequency Degrades from Weekly to Monthly (or Never)

The first compromise most agencies make is reducing review frequency. Weekly search term reviews become biweekly, then monthly, then "when we have time." This degradation happens quietly—no single decision to stop optimizing, just a gradual drift toward lower maintenance as time pressures mount.

The impact is measurable: A search term generating $10 in daily wasted spend costs $70 if caught in a weekly review, $300 if caught monthly, and roughly $900 if caught quarterly. Across 10 accounts, degraded review frequency easily adds $5,000-$10,000 in monthly waste that could have been prevented with consistent optimization.
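The relationship between review cadence and accumulated waste is simple multiplication, sketched below. The $10 daily figure comes from the example above. The 30-day month and 90-day quarter are round-number assumptions, under which a quarterly review works out to about $900 per term.

```python
DAILY_WASTE = 10.0  # dollars per day, the example figure from the text

# Days a wasteful term can run before the next review catches it.
# 30 and 90 are round-number assumptions for "monthly" and "quarterly".
CADENCE_DAYS = {"weekly": 7, "monthly": 30, "quarterly": 90}

def waste_before_caught(daily_waste: float, days_until_review: int) -> float:
    """Worst-case spend on one term that first appears right after a review."""
    return daily_waste * days_until_review

waste_by_cadence = {
    name: waste_before_caught(DAILY_WASTE, days)
    for name, days in CADENCE_DAYS.items()
}
for name, cost in waste_by_cadence.items():
    print(f"{name:>9}: ${cost:,.0f}")
```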

Agencies often shift to reactive optimization—only reviewing accounts when clients complain about performance or when obvious waste shows up in dashboard alerts. This reactive approach catches the most egregious problems but misses the accumulated waste from hundreds of smaller irrelevant terms that aren't individually significant but collectively drain budgets.

Over-Standardization: Applying Broad Rules That Don't Fit Every Client

To manage time constraints, agencies create standardized negative keyword lists applied across multiple accounts: industry-specific lists, generic waste term lists, competitor name lists. These standardized approaches save time but sacrifice the business-context awareness that makes negative keyword optimization effective.

A SaaS company might legitimately want traffic for "free software" because they offer a freemium model. A luxury brand doesn't. Applying standardized exclusions ignores these critical differences, potentially blocking valuable traffic for some clients while leaving others exposed to waste. The efficiency gained through standardization gets offset by reduced optimization quality.

This is precisely why context-aware systems, such as AI-powered negative keyword classification, provide value: they maintain business-specific context at scale without requiring manual customization for each account. The system learns from each account's keywords and business profile to make intelligent suggestions rather than applying generic rules.

Documentation and Institutional Knowledge Evaporate

Under time pressure, documentation is the first casualty. You stop tracking why specific terms were excluded, what search term patterns indicate problems, or what historical decisions inform current strategy. Knowledge lives in individual team members' heads rather than in accessible systems.

When that team member leaves or moves to a different role, institutional knowledge evaporates. The new manager inheriting the account has no context for existing negative keyword lists, no historical data about what's been tried, and no systematic way to learn from past optimization cycles. They essentially start from zero, often repeating mistakes that were already solved.

This knowledge fragmentation creates a permanent scalability barrier. Agencies can't systematically train new team members, can't develop replicable best practices, and can't build compounding expertise over time. Each account remains a unique snowflake requiring deep individual familiarity rather than a standardized workflow that can scale.

False Solutions: Common Workarounds That Don't Actually Scale

When agencies hit the manual workflow ceiling, they typically try several workarounds before accepting that spreadsheet-based processes fundamentally can't scale. Understanding why these false solutions fail saves time and accelerates the transition to effective systems.

Hiring More People: The Linear Scaling Trap

The most common response to manual workflow overload is hiring additional team members. If one PPC manager can effectively handle 10 accounts with spreadsheet workflows, surely two managers can handle 20. The mathematics seem simple: scale linearly by adding headcount.

But this solution ignores the coordination costs, which grow faster than team size. Two managers need to align on exclusion strategies, avoid duplicating work, share learnings across accounts, and maintain consistent quality standards. Three managers need even more coordination. Five managers require formal process documentation, quality assurance systems, and management overhead that erodes the efficiency gains from additional headcount.

More critically, this approach destroys profitability. If manual workflows require one full-time manager per 10 accounts, your labor costs scale directly with client count. There's no operational leverage, no efficiency improvement over time, and no path to profitable growth. You're building a services business with permanently high labor costs rather than a scalable agency model. Research on PPC workflow management shows that agencies achieving scale use standardized, technology-enabled processes rather than simply adding headcount.

Google Sheets Macros and Scripts: Band-Aids on Broken Systems

Another common workaround is building custom Google Sheets macros, Google Ads scripts, or Excel automation to streamline parts of the manual workflow. These tools can download search term reports automatically, apply basic filters, or flag obvious waste terms for review.

These solutions help at the margins but don't address the core problem: they still require manual judgment on thousands of individual search terms, still create version control challenges, and still demand extensive human review time. You've automated the data collection but not the analysis or decision-making—the parts that actually consume time at scale.
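As an illustration of what this kind of scripted filtering typically does, here is a minimal sketch. The report rows, the existing negative list, and the generic waste-word list are all made up, and the exact-match check against existing negatives is a simplification that ignores negative keyword match types.

```python
# Hypothetical rows from a search term report export: (search term, cost).
search_terms = [
    ("free crm software", 42.10),
    ("crm software pricing", 88.00),
    ("crm software jobs", 17.35),
    ("crm jobs", 9.80),
]
existing_negatives = {"crm jobs"}          # already excluded in the account
waste_words = {"free", "jobs", "salary"}   # assumed generic waste-word list

def flag_for_review(rows, negatives, words):
    """Return terms that contain a waste word and are not already excluded."""
    flagged = []
    for term, cost in rows:
        if term in negatives:
            continue  # skip terms that duplicate an existing negative
        if words & set(term.split()):
            flagged.append((term, cost))
    # Most expensive first, so reviewers see the biggest spend at the top.
    return sorted(flagged, key=lambda row: row[1], reverse=True)

flagged = flag_for_review(search_terms, existing_negatives, waste_words)
for term, cost in flagged:
    print(f"{term}: ${cost:.2f}")
```

Note what the script does not do: it cannot tell whether "free crm software" is waste or a valuable freemium lead, so every flagged term still lands in front of a human reviewer.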

Moreover, custom scripts create maintenance burdens. Google Ads API changes break your scripts. Team members who didn't write the original code can't modify it. Edge cases that weren't anticipated in the original logic create errors. Instead of solving your scalability problem, you've added a custom codebase to maintain alongside your existing workload.

Offshore Outsourcing: Judgment Lost in Translation

Some agencies attempt to outsource manual search term review to lower-cost offshore teams. The logic is appealing: if the work is primarily data processing, why not hire less expensive labor to handle the spreadsheet work while your senior team focuses on strategy?

This approach fails because effective negative keyword management requires business judgment, not just data processing. Determining whether "affordable luxury watches" is relevant for a mid-tier jewelry client requires understanding their positioning, pricing strategy, and competitive context. Offshore reviewers working from generic guidelines make poor decisions that either block valuable traffic or allow expensive waste.

The quality control overhead required to make outsourcing work—detailed guidelines, extensive training, ongoing review of decisions, error correction—often exceeds the time savings. You've traded direct work for management work without actually reducing the total time burden or improving scalability.

The Transition: Moving from Manual Workflows to Scalable Systems

Recognizing that spreadsheet workflows can't scale is the first step. The second is understanding what scalable systems actually look like and how to transition without disrupting client performance during the migration.

What Scalable Systems Require: Context-Aware Automation, Not Just Rules

Scalable negative keyword management requires more than simple automation—it requires context-aware systems that apply business judgment at scale. Rule-based automation ("exclude all terms containing 'free'") creates as many problems as it solves by blocking valuable traffic without understanding business context.

Context-aware AI systems like Negator analyze search terms using multiple signals: your active keywords, your business profile, industry patterns, and search intent indicators. This allows the system to understand that "free trial software" might be valuable for a SaaS company offering free trials but irrelevant for an enterprise software provider with no freemium model. The same term receives different treatment based on business context rather than generic rules.
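The difference between a generic rule and a context-aware decision can be shown with a toy sketch. It is deliberately simplistic and is not how Negator or any other product works internally. The `BusinessProfile` class and its single `offers_free_trial` flag are hypothetical stand-ins for the richer signals described above.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class BusinessProfile:
    name: str
    offers_free_trial: bool  # hypothetical context signal

def rule_based(term: str) -> str:
    """Generic rule: exclude anything containing the word 'free'."""
    return "exclude" if "free" in term.split() else "keep"

def context_aware(term: str, profile: BusinessProfile) -> str:
    """Same rule, overridden when the business actually offers a free trial."""
    if "free" in term.split() and profile.offers_free_trial:
        return "keep"
    return rule_based(term)

saas = BusinessProfile("SaaS with free trial", offers_free_trial=True)
enterprise = BusinessProfile("Enterprise vendor", offers_free_trial=False)
term = "free trial software"

print("rule-based:", rule_based(term))
print("SaaS:", context_aware(term, saas))
print("enterprise:", context_aware(term, enterprise))
```

The generic rule excludes the term for everyone, while the context-aware version keeps it for the SaaS profile and excludes it for the enterprise one.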

Critically, scalable systems maintain human oversight while automating analysis. Negator makes suggestions rather than automated decisions—you review AI-classified terms before uploading, maintaining control while dramatically reducing review time. This hybrid approach delivers the time savings of automation with the quality assurance of human judgment. According to research on scalable campaign management, negative keywords are essential infrastructure for scaling Google Ads campaigns without proportionally increasing wasted spend.

Multi-Account Architecture: Why MCC Integration Matters

For agencies managing 10+ accounts, direct integration with an MCC (My Client Center, now called a Google Ads manager account) is non-negotiable. Systems that require exporting data from each individual account, processing it separately, and uploading results individually don't solve the scalability problem. They just digitize the same manual workflow.

Proper MCC integration allows you to analyze search terms across all client accounts in a unified interface, apply account-specific business context automatically, review and approve negative keyword suggestions in bulk, and track historical decisions and performance impact systematically. This architecture reduces 10-12 hours of weekly work to 2-3 hours while actually improving decision quality through better data organization and context management.

Negator's MCC integration enables agencies to scale negative keyword management from one account to 50+ without proportionally increasing time investment. The system handles data collection, contextual analysis, and intelligent classification across all accounts while presenting consolidated results for efficient human review and approval.

Built-In Safeguards: Why Protected Keywords Matter

One reason agencies resist moving away from manual spreadsheet workflows is fear of accidentally blocking valuable traffic through automated processes. This concern is legitimate—over-aggressive negative keyword automation can do more harm than good by excluding high-intent searches.

Scalable systems address this through built-in safeguards like protected keywords. You designate specific terms that should never be excluded (brand names, core product terms, high-converting phrases), and the system automatically prevents these from being suggested as negatives even if they appear in irrelevant search queries. This safety net allows you to leverage automation confidently without constant worry about blocking valuable traffic.
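A protected-keyword safeguard can be sketched as a filter applied to suggested negatives before anyone reviews them. The function name, the protected phrases, and the suggestions below are hypothetical, and plain substring matching is a simplifying assumption.

```python
def apply_protected_keywords(suggestions, protected):
    """Split suggested negatives into those safe to review and those blocked
    because they contain a protected phrase."""
    kept, blocked = [], []
    for suggestion in suggestions:
        text = suggestion.lower()
        if any(phrase.lower() in text for phrase in protected):
            blocked.append(suggestion)
        else:
            kept.append(suggestion)
    return kept, blocked

# Hypothetical brand name and core product term.
protected = ["acme", "project management software"]
suggestions = [
    "acme login help",
    "free project templates",
    "project management software jobs",
]
kept, blocked = apply_protected_keywords(suggestions, protected)
print("sent to review:", kept)
print("blocked by safeguard:", blocked)
```

Running the filter before human review means a protected term never even appears as a candidate for exclusion, which is the safety net this section describes.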

These safeguards improve over time as the system learns from your approval patterns. If you consistently reject certain types of suggestions, the AI adjusts its classification logic to reduce similar recommendations. You're not just using a static tool—you're building a system that becomes more accurate and aligned with your judgment over time.

Implementation Roadmap: How to Transition Without Disrupting Performance

Moving from spreadsheet-based workflows to scalable systems doesn't require a disruptive overnight transition. Successful agencies use a phased approach that validates the new system while maintaining existing quality standards.

Phase 1: Pilot with High-Volume Accounts

Start by implementing scalable systems on 2-3 high-volume accounts that currently consume the most manual review time. These accounts provide sufficient data volume to properly evaluate system performance while representing a manageable transition scope.

Run the new system in parallel with your existing spreadsheet workflow for the first 2-3 weeks. Review search terms using both methods and compare results: how many irrelevant terms did each approach identify? Were there any valuable terms the AI system suggested excluding that you would have kept? Were there waste terms you caught manually that the system missed?

Track specific validation metrics: time spent reviewing each account, number of negative keywords added, estimated waste prevented, and any quality issues or missed opportunities. This data-driven approach builds confidence in the new system while identifying any adjustments needed before broader rollout.
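The parallel-run comparison comes down to set operations on the two lists of exclusions. In the sketch below, both lists are made-up examples and the overlap ratio is just one possible summary metric.

```python
# Negatives identified for the same account and week by each method (made up).
manual = {"crm jobs", "free crm", "crm salary", "crm tutorial"}
system = {"crm jobs", "free crm", "crm internship", "crm pricing"}

agreed = manual & system        # both methods flagged these
manual_only = manual - system   # waste the system missed, or manual overreach
system_only = system - manual   # check for valuable terms before accepting

# Share of all flagged terms that both methods agree on.
overlap = len(agreed) / len(manual | system)

print("agreed:", sorted(agreed))
print("manual only:", sorted(manual_only))
print("system only:", sorted(system_only))
print(f"overlap: {overlap:.0%}")
```

The `system_only` list deserves the closest look during the pilot, since it is where a valuable term like "crm pricing" would show up if the system were too aggressive.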

Phase 2: Expand to Full Client Portfolio

Once the pilot validates system performance, expand to your full client portfolio in waves. Add 3-5 accounts weekly rather than migrating all accounts simultaneously. This staged rollout allows you to refine processes, train team members gradually, and maintain quality control throughout the transition.

Communicate changes to clients proactively. Explain that you're implementing AI-powered tools to improve negative keyword optimization, allowing more thorough and consistent analysis across all accounts. Frame this as a service enhancement that provides better protection against wasted spend—which it is—rather than a cost-cutting measure.

Reassign team members' time from manual data processing to higher-value activities: strategic campaign development, competitive analysis, creative testing, and client relationship building. The goal isn't to reduce headcount but to redirect existing talent toward work that actually grows accounts rather than just maintaining them.

Phase 3: Optimize Workflows and Build Institutional Knowledge

With scalable systems in place, document standardized workflows that leverage automation effectively: weekly review schedules, approval processes, escalation procedures for ambiguous terms, and reporting formats for client communication. These documented processes enable consistent execution across your team and facilitate onboarding new members.

Build institutional knowledge systematically by analyzing patterns across accounts: which types of search terms consistently indicate waste across industries? What business profile characteristics predict which exclusions will be most impactful? How do negative keyword strategies need to evolve as accounts mature from launch to scale?

This accumulated knowledge becomes a competitive advantage that compounds over time. You're not just maintaining accounts more efficiently—you're developing expertise that improves outcomes for every client and differentiates your agency's capabilities in a competitive market.

Quantifying the Impact: What Agencies Gain by Abandoning Spreadsheets

The benefits of transitioning from manual spreadsheet workflows to scalable systems aren't just theoretical—they're measurable in both time savings and financial performance.

Time Recovery: From 10-12 Hours Weekly to 2-3 Hours

Agencies using Negator typically save 10+ hours per week on negative keyword management across 10-15 accounts—a 75-80% reduction in time spent on search term review. This isn't time saved by doing less work or reducing quality; it's time saved by eliminating manual data processing while maintaining or improving decision quality through context-aware AI analysis.

For a PPC manager billing at $150/hour (a conservative agency rate), 10 hours weekly represents $1,500 per week in recovered billable time, or $78,000 annually. That recovered capacity can be redirected to strategic work that actually grows client accounts rather than just maintaining them: work that generates additional revenue and improves client retention.

Performance Improvement: 20-35% ROAS Increases Within First Month

Beyond time savings, scalable negative keyword management delivers measurable performance improvements. Agencies using AI-powered systems typically see 20-35% ROAS improvement within the first month as accumulated waste gets systematically eliminated and campaigns focus on high-intent traffic.

For a client spending $10,000 monthly on Google Ads with typical 20% waste ($2,000 in irrelevant clicks), effective negative keyword optimization recovers $1,500-$1,800 of that waste monthly. Across 10 clients, that's $15,000-$18,000 in monthly waste prevented—waste that either improves client results or allows budget reallocation to higher-performing campaigns.
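The waste-recovery figures above follow from three inputs, as the sketch below shows. The 75% and 90% recovery rates are not stated in the text. They are derived here from the $1,500-$1,800 range against $2,000 of waste, and the function name is hypothetical.

```python
def recovered_waste(monthly_spend: float, waste_pct: float, recovery_pct: float) -> float:
    """Dollars of wasted spend recovered per month (percentages as 0-100)."""
    return monthly_spend * waste_pct * recovery_pct / 10_000

# $10,000 spend, 20% waste, 75-90% of that waste recovered (derived rates).
per_client_low = recovered_waste(10_000, 20, 75)
per_client_high = recovered_waste(10_000, 20, 90)

CLIENTS = 10
print(f"Per client: ${per_client_low:,.0f} to ${per_client_high:,.0f}")
print(f"Across {CLIENTS} clients: "
      f"${per_client_low * CLIENTS:,.0f} to ${per_client_high * CLIENTS:,.0f}")
```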

These measurable improvements directly impact client retention and expansion. When you can demonstrate clear waste reduction and ROAS improvement through better negative keyword hygiene, clients increase budgets and renew contracts. The agencies that scale successfully are the ones that can deliver consistent optimization quality across all accounts regardless of size—exactly what scalable systems enable.

Capacity Growth: Managing 50+ Accounts Without Proportional Headcount Increases

The ultimate measure of scalable systems is operational leverage: the ability to grow revenue without proportionally increasing costs. With spreadsheet workflows, agencies hit a hard ceiling around 10-15 accounts per manager. With scalable systems, that capacity increases to 30-50 accounts per manager while maintaining or improving optimization quality.

This operational leverage transforms agency profitability. Instead of adding one full-time manager for every 10 new clients, you add one manager for every 30-40 new clients. The difference represents pure margin expansion—revenue growth that flows directly to profit rather than being consumed by proportional cost increases.
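The headcount difference is easy to see with a quick calculation. The 60-account portfolio is a hypothetical example, and the per-manager capacities are the figures quoted in this section.

```python
import math

def managers_needed(accounts: int, accounts_per_manager: int) -> int:
    """Whole managers required to cover a portfolio at a given capacity."""
    return math.ceil(accounts / accounts_per_manager)

PORTFOLIO = 60  # hypothetical agency size

spreadsheet_team = managers_needed(PORTFOLIO, accounts_per_manager=10)
scalable_team = managers_needed(PORTFOLIO, accounts_per_manager=30)

print(f"Spreadsheet workflow: {spreadsheet_team} managers")
print(f"Scalable system:      {scalable_team} managers")
```

At 60 accounts, the spreadsheet model needs six managers where the scalable model needs two, and the gap widens with every additional block of clients.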

Agencies that successfully transition from manual workflows to scalable systems report fundamentally different growth trajectories: faster client acquisition (because they can actually handle new accounts), higher retention (because optimization quality remains consistent across all clients), and improved profitability (because operational leverage allows margin expansion as they scale). These aren't incremental improvements—they're transformational changes in agency economics.

Breaking Free from the Manual Trap

The manual negative keyword trap isn't a failure of effort or expertise—it's a fundamental limitation of spreadsheet-based workflows that can't scale beyond 10-15 accounts without sacrificing quality, burning out teams, or destroying profitability. Agencies that recognize this limitation and transition to context-aware, scalable systems gain measurable advantages in time efficiency, client performance, and growth capacity.

Negator enables this transition by combining AI-powered search term classification with business context awareness, multi-account MCC integration, and built-in safeguards like protected keywords. The result is a system that maintains human judgment and control while eliminating the manual data processing that prevents agencies from scaling effectively. See how Negator integrates into agency workflows to transform negative keyword management from a time-consuming bottleneck into a systematic competitive advantage.

The choice isn't between manual control and risky automation—it's between workflows that break under growth and systems that scale with your agency. For PPC teams managing 10+ accounts, the question isn't whether to abandon spreadsheet-based workflows, but how quickly you can make the transition without disrupting client performance. The agencies that answer that question decisively are the ones that scale successfully while others remain trapped in manual processes that consume their growth potential.
